ITDays Demo: Controlling a Robot and a Smart Lamp

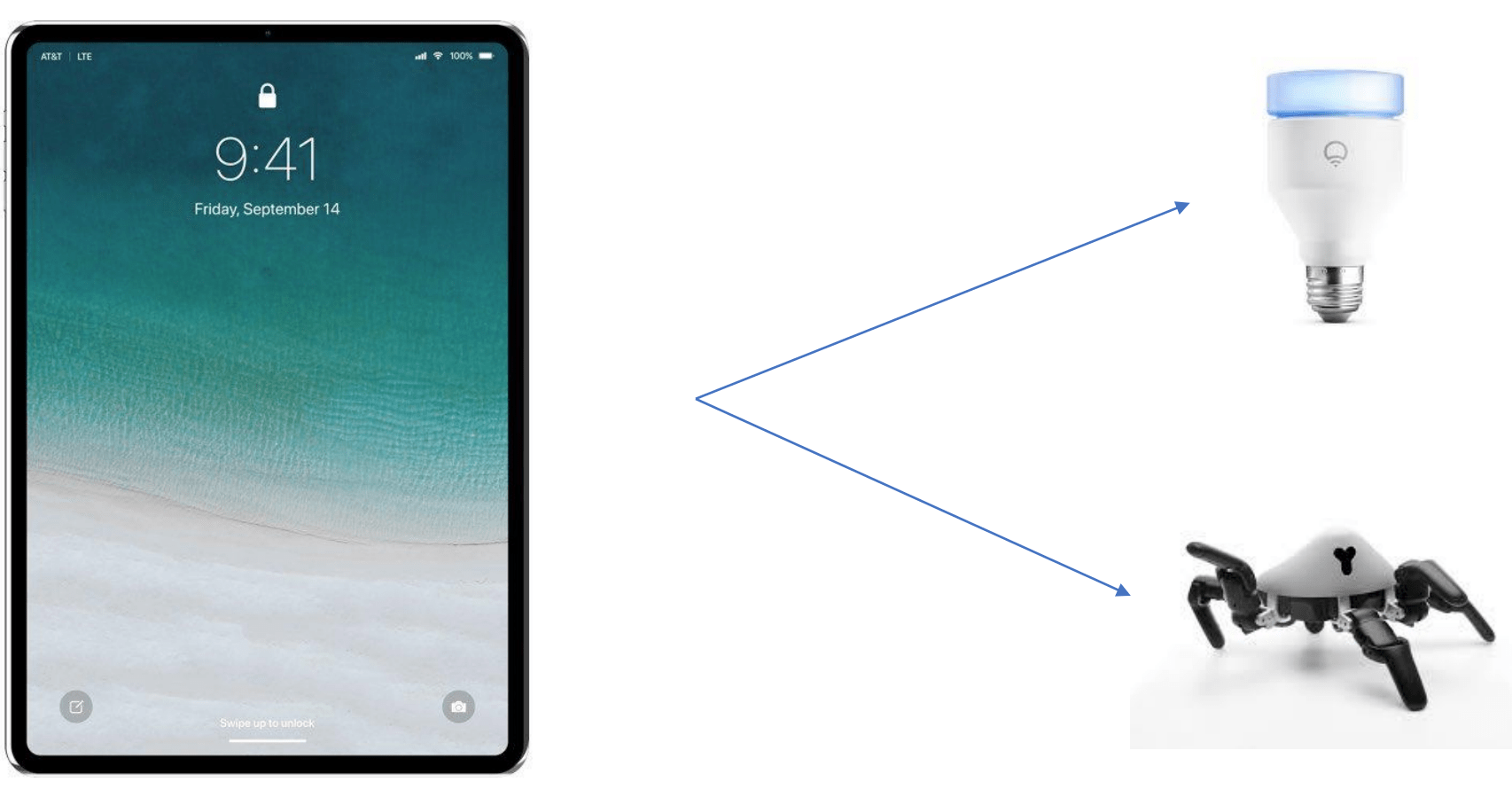

Connected devices and the Internet of Things (IoT) are the main concepts behind this demo.

Using the camera of an iOS device, the user can point at either a smart lamp or the Hexa robot to invoke an action.

The smart lamp simply turns on, while the robot starts writing "IT DAYS".

iOS

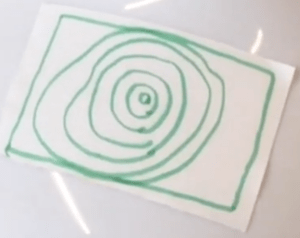

I used ARCore for image and object detection.

These manually drawn patterns are used for image detection:

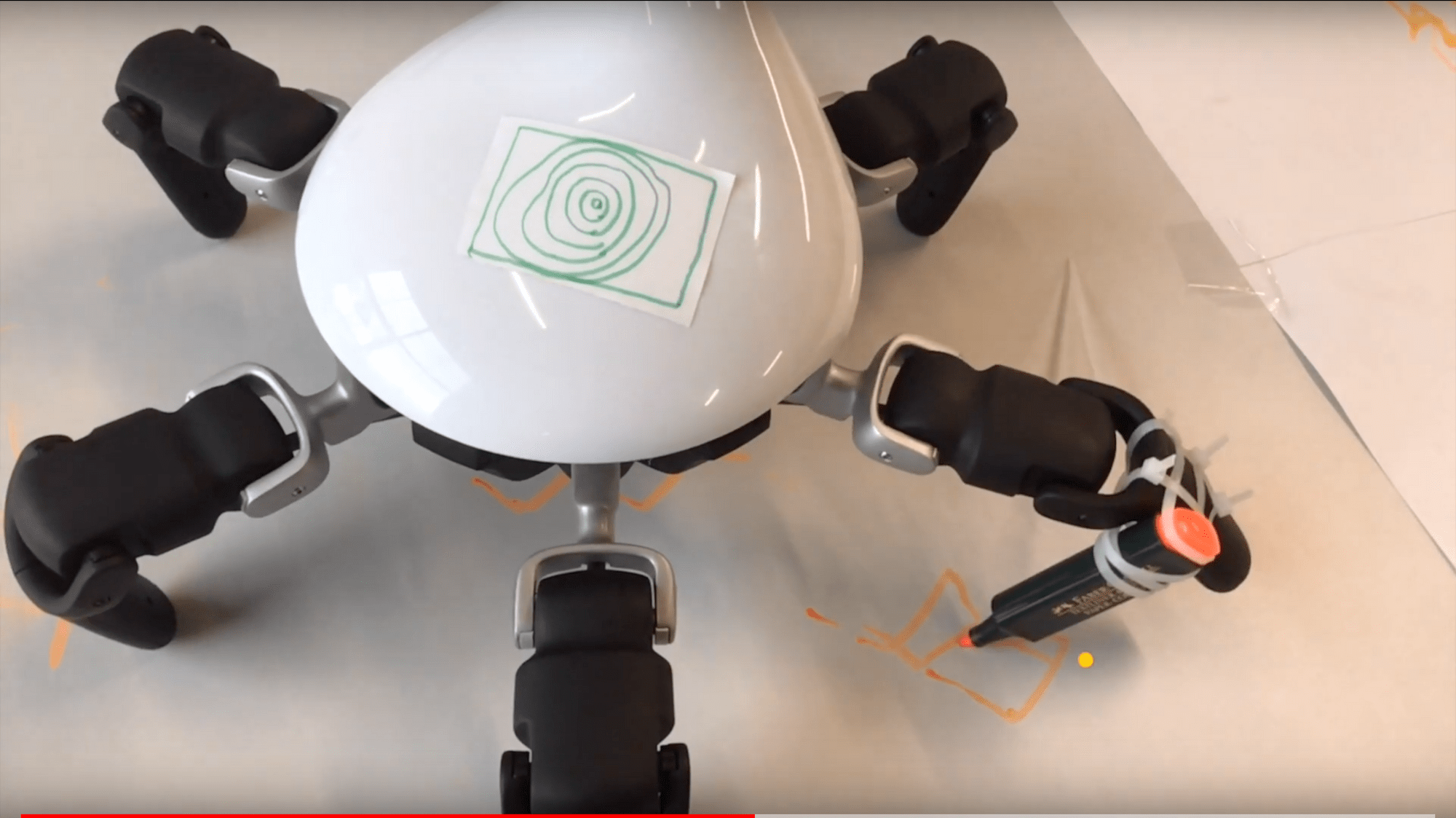

ROBOT

To control the robot, an API server runs on it and listens for a trigger from the tablet. When the trigger arrives, the robot starts writing a sentence. In addition, there are several endpoints for controlling the robot manually from a web interface.
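
The server described above could be sketched roughly as follows. This is a minimal illustration using only the Go standard library, not the project's actual code: the `robot` type, its `write` and `move` fields, the `/trigger` and `/move` paths, and the `direction` query parameter are all hypothetical names chosen for the example.

```go
package main

import (
	"net/http"
	"sync"
)

// robot is a hypothetical stand-in for the Hexa control layer.
// write and move are injected so the real hardware calls can be swapped in.
type robot struct {
	mu    sync.Mutex
	busy  bool
	write func(text string) // assumed to block until the writing animation ends
	move  func(direction string)
}

// tryStart begins writing text in the background.
// It returns false if a previous writing job is still running.
func (r *robot) tryStart(text string) bool {
	r.mu.Lock()
	defer r.mu.Unlock()
	if r.busy {
		return false
	}
	r.busy = true
	go func() {
		r.write(text)
		r.mu.Lock()
		r.busy = false
		r.mu.Unlock()
	}()
	return true
}

// validDirections lists the manual commands this sketch accepts (assumed set).
var validDirections = map[string]bool{
	"forward": true, "backward": true, "left": true, "right": true,
}

// newMux wires the trigger endpoint and one manual-control endpoint.
func newMux(r *robot) *http.ServeMux {
	mux := http.NewServeMux()

	// Called by the tablet when it recognises the robot's pattern.
	mux.HandleFunc("/trigger", func(w http.ResponseWriter, req *http.Request) {
		if req.Method != http.MethodPost {
			http.Error(w, "use POST", http.StatusMethodNotAllowed)
			return
		}
		if !r.tryStart("IT DAYS") {
			http.Error(w, "robot is busy", http.StatusConflict)
			return
		}
		w.WriteHeader(http.StatusAccepted)
	})

	// Called by the web interface for manual control.
	mux.HandleFunc("/move", func(w http.ResponseWriter, req *http.Request) {
		dir := req.URL.Query().Get("direction")
		if !validDirections[dir] {
			http.Error(w, "unknown direction", http.StatusBadRequest)
			return
		}
		r.move(dir)
		w.WriteHeader(http.StatusNoContent)
	})
	return mux
}
```

To serve it, pass the result of `newMux` to `http.ListenAndServe` together with an address of your choice. The trigger handler returns immediately and lets the writing run in a goroutine, so a second trigger that arrives while the robot is still writing is rejected instead of queued.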

The animations were created with a helper program called Hexa Simulator and then imported into the program as strings.

Technologies used

- Go

- Swift

- ARCore

- Apple Vision API

GitHub

Project link and presentation link

The project was realised for the ITDays conference.