WaterShed C++ Implementation

Since I heard of it, I have always wanted to manually implement WaterShed Algorithm and now I got the chance.

Because I wanted to create a UI and a skeleton that would allow me to easily test and implement multiple filters, I chose QT as a design framework and came with the following user interface:

Just need to select the picture (all taken from a predetermined folder), select the filter you want and voila.

The interface that every filter should respect is the following

class FilterInterface {

private:

string name;

public:

virtual Mat processImage(Mat image) = 0;

const string &getName() const {

return name;

}

FilterInterface(const string &name) : name(name) {}

};

#endif

Now, back to the algorithm...

What is Watershed?

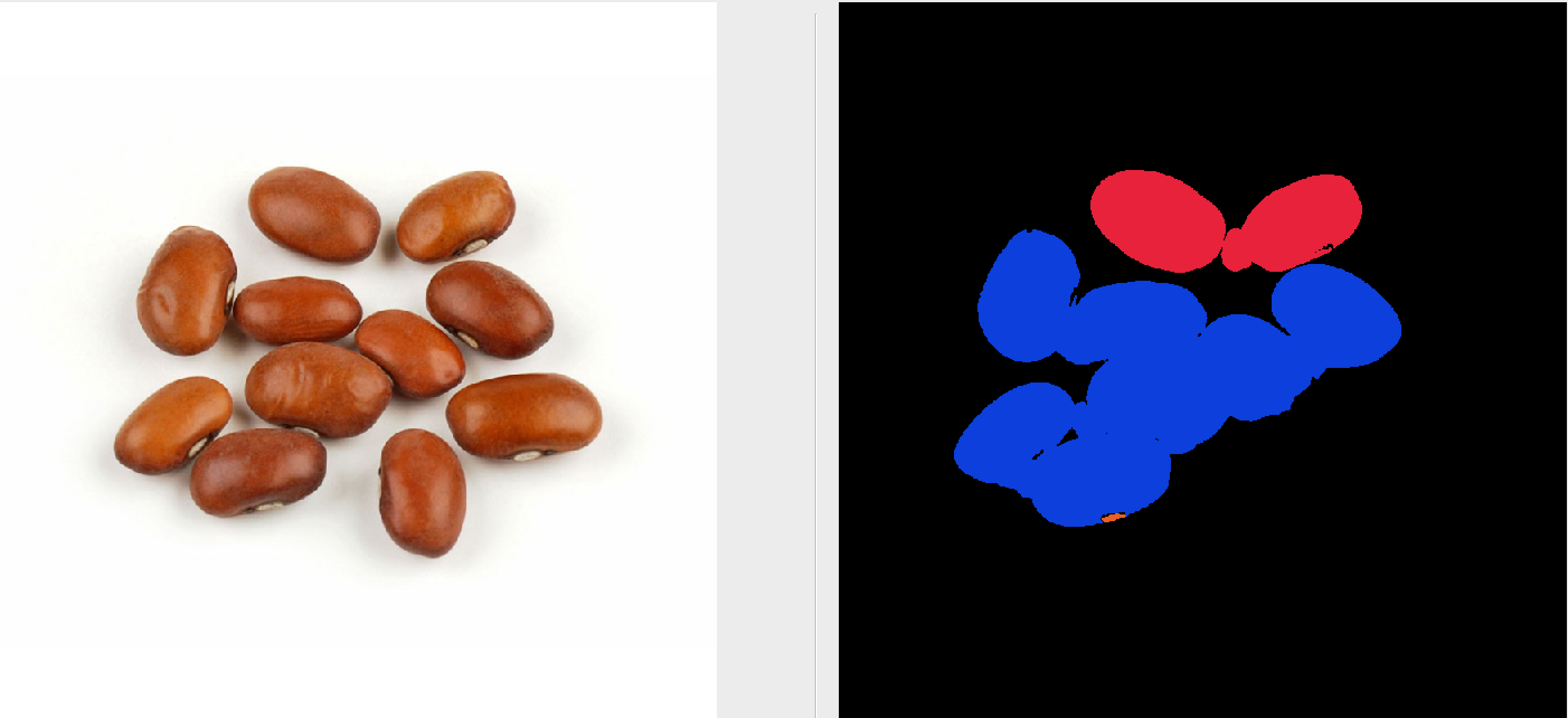

Watershed is a transformation on grayscale images. The aim of this technique is to segment the image. It works by treating the image as a topographic map (height map), based on the intensity of the pixels, and starting to fill some lower regions with water until two separate regions of water (lakes) merge, at exactly those coordinates there is placed a segmentation wall.

There are multiple steps that compose it:

- Make the image grayscale

- Apply a threshold

- Inverse the pixels

- Erode foreground

- Apply distance transform

- Compute the actual watershed algorithm

Let's take them one by one.

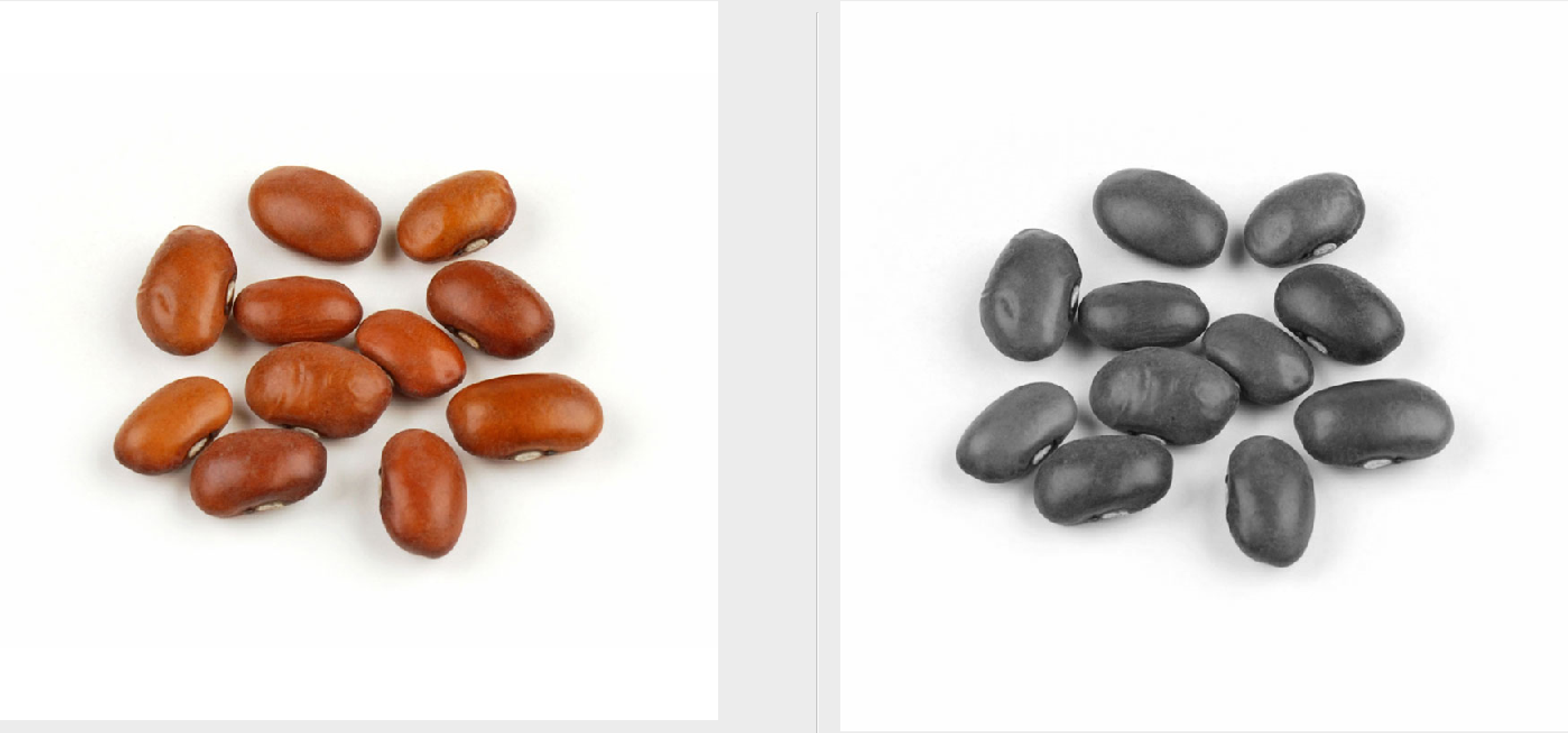

Making the image grayscale

Since I wanted to implement as much of the algorithm by myself, I found that in order to convert a color image to grayscale I can use this formula: 0.21 R + 0.72 G + 0.07 B. (approach being called the Luminosity Method).

So I just need to iterate through every pixel and compute the gray value.

Mat makeImageGrayScale(Mat image) {

Mat grayScale(image.rows, image.cols, CV_8UC1, Scalar(70));

for(int i = 0; i < image.rows; i++) {

for(int j = 0; j < image.cols; j++) {

double gray = 0.21 * image.at<cv::Vec3b>(i,j)[0] +

0.72 * image.at<cv::Vec3b>(i,j)[1] +

0.07 * image.at<cv::Vec3b>(i,j)[2];

grayScale.at<uchar>(i,j) = (uchar) gray;

}

}

return grayScale;

}

There are two other choices:

Lightness Method -> (max(R, G, B) + min(R, G, B)) / 2

Average Method -> (R + G + B) / 3.

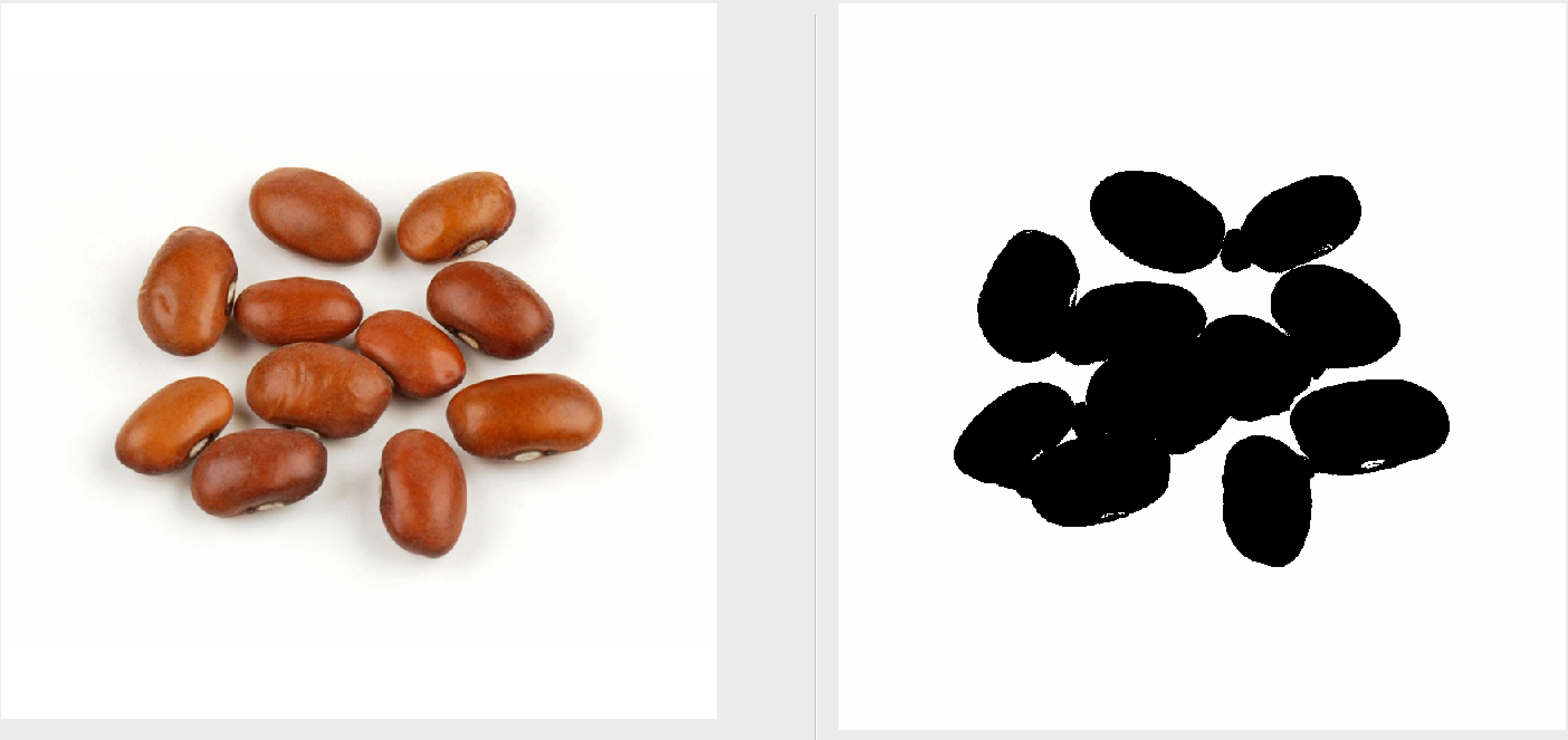

Applying a threshold

At this step we are reducing our grayscale image to a binary image.

In order to be able to see the resulting image, I am mapping 1 to 255 and keeping 0 unmodified.

The biggest problem at this step is to choose the level of brightness that will decide if a pixel will turn into 0 or 1.

We could just try something like this:

int threshold = 100;

if(pixelValue > threshold) {

pixelValue = 1;

} else {

pixelValue = 0;

}

But each image will require a specific threshold so that the important pixels would not be lost. The solution to this is to use Otsu's Binarization that automatically find a more suitable value.

Inverting the pixels

We want the white pixels (255) to represent the foreground, not background, so we just switch them

Mat inverseImage(Mat image) {

for(int i = 0; i < image.rows; i++) {

for(int j = 0; j < image.cols; j++) {

if( (int) image.at<uchar>(i,j) == ZERO ) {

image.at<uchar>(i,j) = ONE;

} else {

image.at<uchar>(i,j) = ZERO;

}

}

}

return image;

}

Erosion

The difference is subtle, basically we want to erode the foreground, so that we have more chances to separate the foreground objects.

A complete explanation on how erosion works can be found here: https://homepages.inf.ed.ac.uk/rbf/HIPR2/erode.htm

vector<vector<bool>> shouldBeZeroImage(image.rows, vector<bool>(image.cols, false));

for (int i = n / 2; i < image.rows - n / 2; i++) {

for (int j = m / 2; j < image.cols - m / 2; j++) {

// Loop the kernel

if ((int)image.at<uchar>(i, j) == ONE) {

bool shouldBeZero = false;

for (int crtX = i - n / 2, x = 0; crtX <= i + n / 2; crtX++, x++) {

for (int crtY = j - m / 2, y = 0; crtY <= j + m / 2; crtY++, y++) {

if ((int)image.at<uchar>(crtX, crtY) == ZERO && kernel[x][y] == 1) {

shouldBeZero = true;

break;

}

}

}

if (shouldBeZero) {

shouldBeZeroImage[i][j] = true;

}

}

}

}

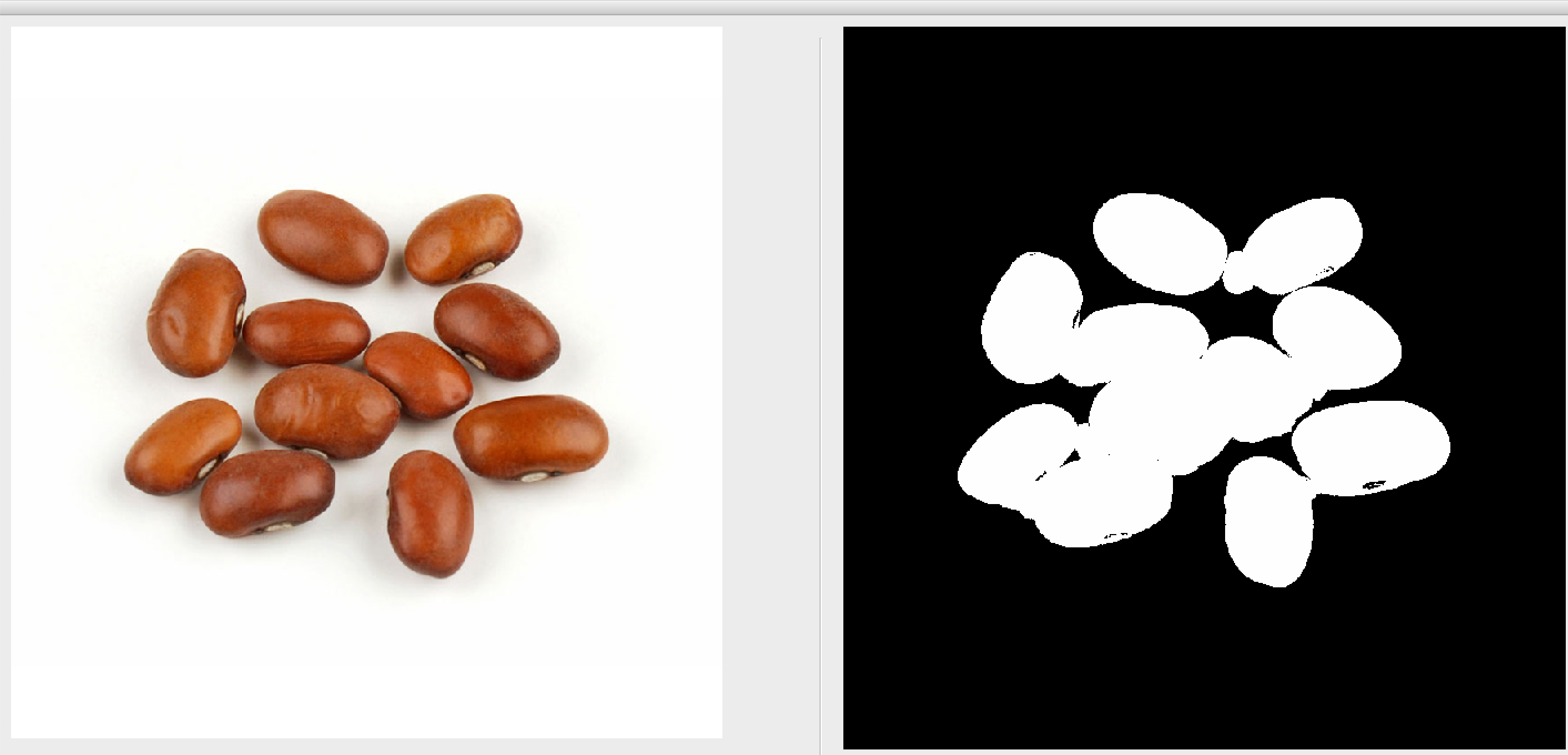

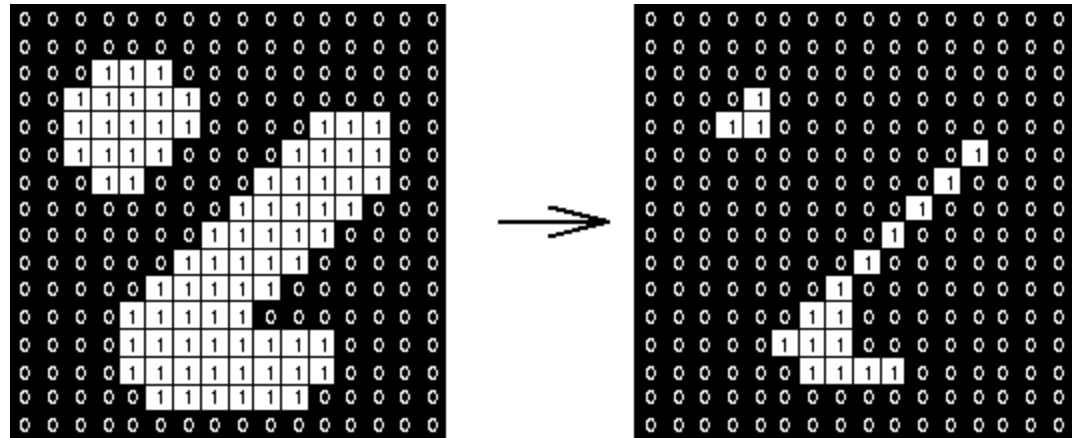

Distance transform

Until now we have a binary image, now we want to actually transform it into a height map so we can apply the actual watershed algorithm. Distance transform being one of the most straightforward ways to do it.

Basically a foreground pixel is more intense if it is further away from a background pixel. There are multiple ways to do it, I have used a path finding algorithm to compute this distance.

while (!qx.empty()) {

int crtX = qx.front();

qx.pop();

int crtY = qy.front();

qy.pop();

bool isBigger = true;

for (int h = 0; h < 8; h++) {

int nextX = crtX + dx[h];

int nextY = crtY + dy[h];

if (nextX < 0 || nextY < 0 || nextX >= image.rows || nextY >= image.cols || (int)image.at<uchar>(nextX, nextY) == ZERO)

continue;

}

if ((int)image.at<uchar>(crtX, crtY) <= (int)image.at<uchar>(nextX, nextY)) {

isBigger = false;

}

if ((int)image.at<uchar>(crtX, crtY) + STEP < (int)image.at<uchar>(nextX, nextY)) {

visited[nextX][nextY] = true;

image.at<uchar>(nextX, nextY) = (uchar)min((image.at<uchar>(crtX, crtY) + STEP), 254);

qx.push(nextX);

qy.push(nextY);

}

}

if (isBigger) {

markers.push_back(Point(crtX, crtY));

markerImage.at<Vec3b>(crtX, crtY) = {0, 255, 0};

}

}

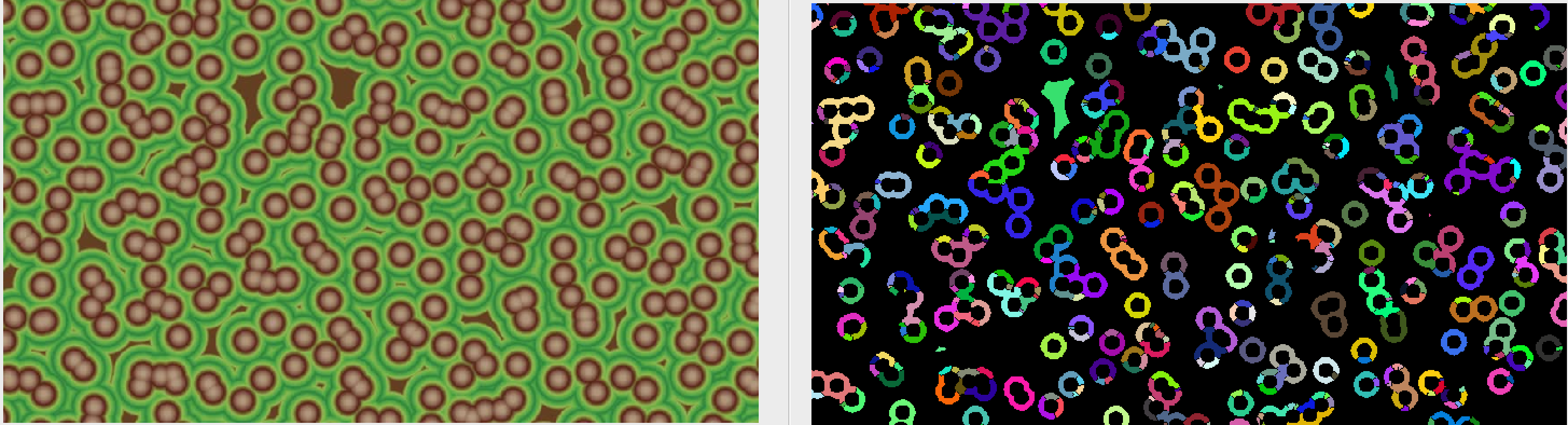

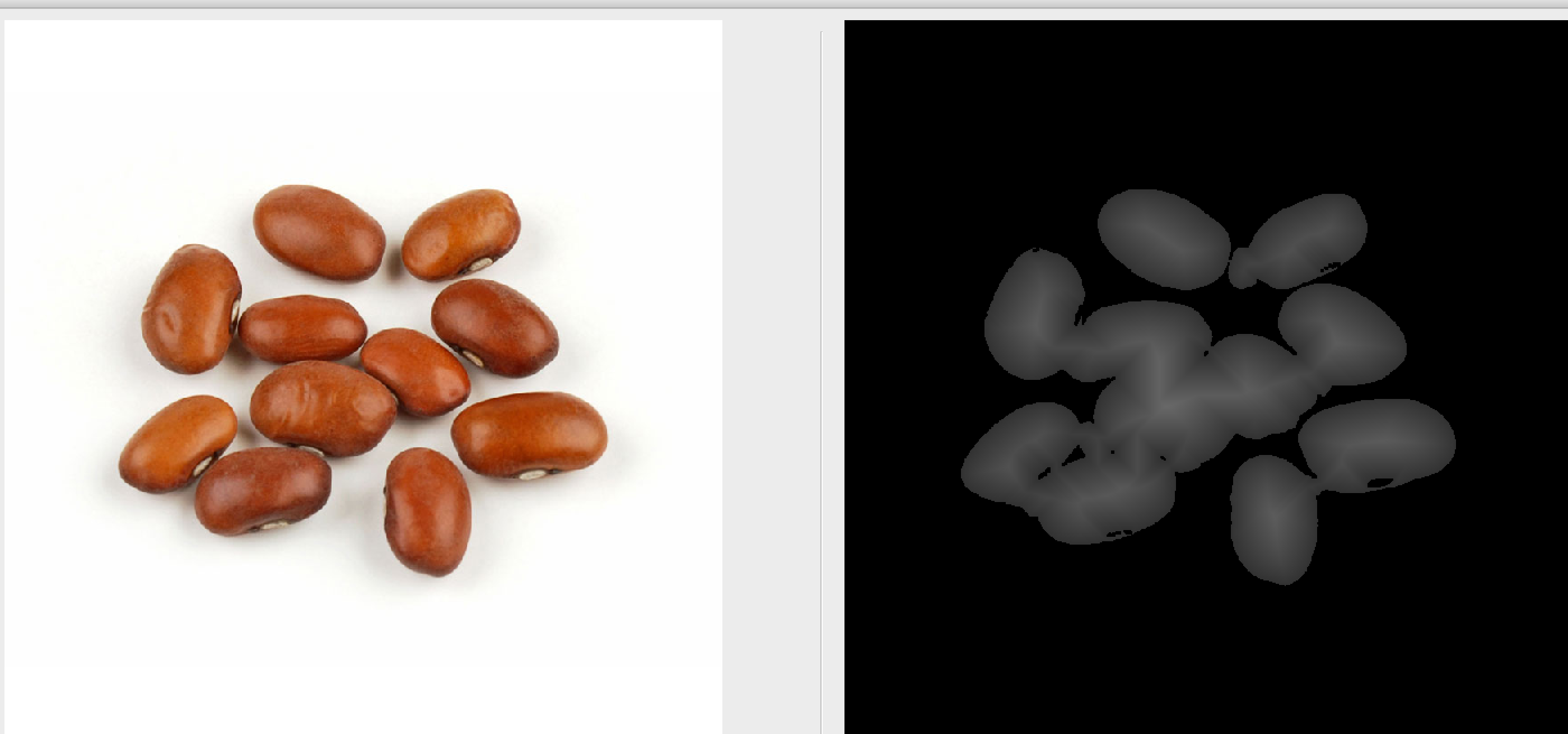

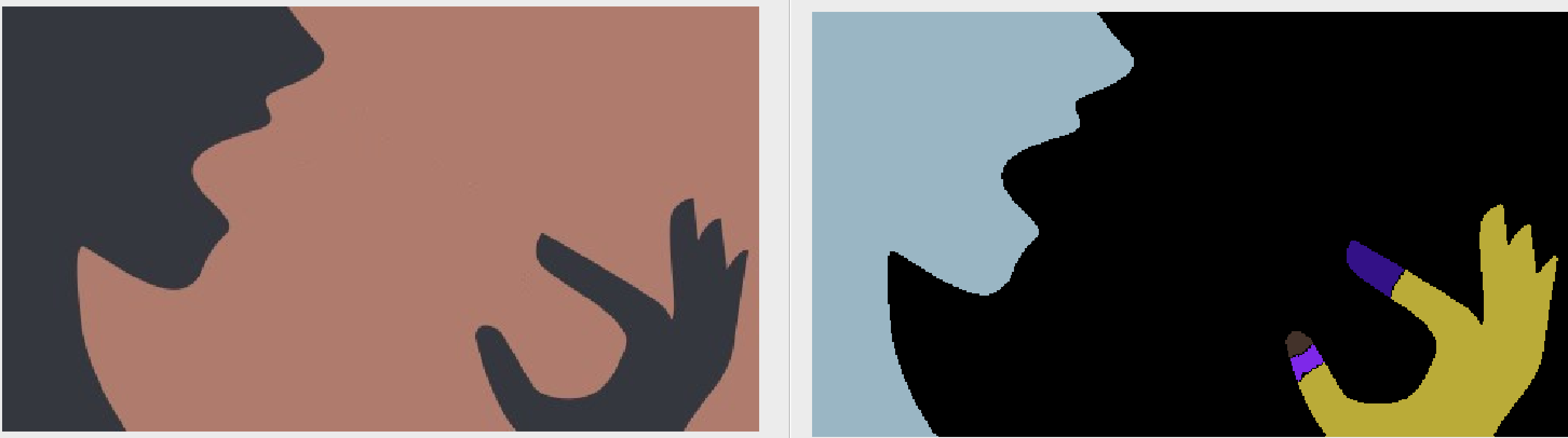

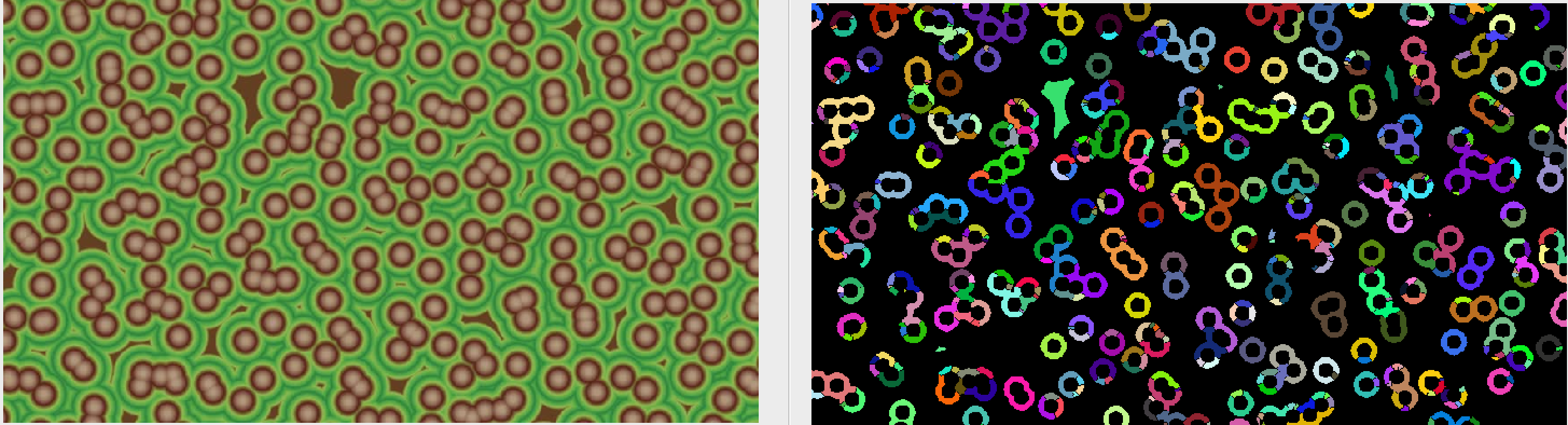

Actually, this generates a funny effect for this picture, I am calling it: The anatomy of a Jelly Bean

There are also other methods that achieve this step, they can be found here: http://www.ijsce.org/wp-content/uploads/papers/v4i1/A2060034114.pdf

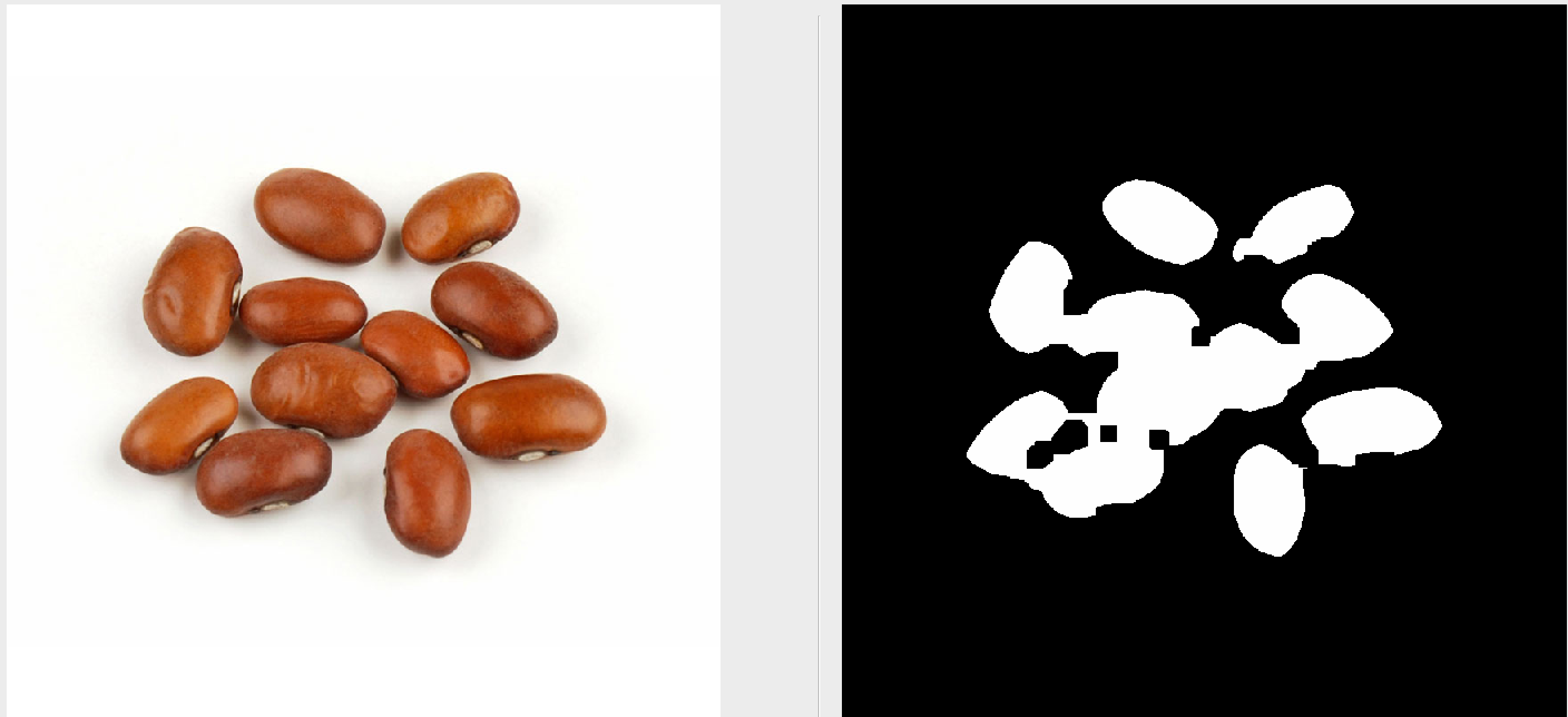

Watershed Algorithm

One last step before applying the watershed algorithm is to find the markers from where the flooding will begin, to many markers and the result will be over segmented, to few, and some regions will be missed.

My approach was to take the foreground points that are the furthest from the background, and start the flooding from there.

After that, just start the flooding process, and remember to create a wall (of empty pixels) between two adjacent flooding regions.

WaterShed logic:

- A set of markers, pixels where the flooding shall start, are chosen. Each is given a different label.

- The neighboring pixels of each marked area are inserted into a priority queue with a priority level corresponding to the gradient magnitude of the pixel.

- The pixel with the lowest priority level is extracted from the priority queue. If the neighbors of the extracted pixel that have already been labeled all have the same label, then the pixel is labeled with their label. All non-marked neighbors that are not yet in the priority queue are put into the priority queue.

- Redo step 3 until the priority queue is empty.

The non-labeled pixels are the watershed lines.

// Do the watershed

while (!pq.empty()) {

Pixel top = pq.top();

pq.pop();

bool canLabel = true;

int neighboursLabel = 0;

for (int i = 0; i < 4; i++) {

int nextX = top.x + dx[i];

int nextY = top.y + dy[i];

if (nextX < 0 || nextY < 0 || nextX >= image.rows || nextY >= image.cols) {

continue;

}

Pixel next = Pixel((int)image.at<uchar>(nextX, nextY), nextX, nextY);

// Must check if all surrounding marked have the same color

if (markerMap[next.x][next.y] != 0 && next.val != 0) {

if (neighboursLabel == 0) {

neighboursLabel = markerMap[next.x][next.y];

}

else {

if (markerMap[next.x][next.y] != neighboursLabel) {

canLabel = false;

}

}

}

else {

if (inPq[nextX][nextY] == false) {

inPq[next.x][next.y] = true;

pq.push(next);

}

}

}

if (canLabel) {

markerMap[top.x][top.y] = neighboursLabel;

}

}

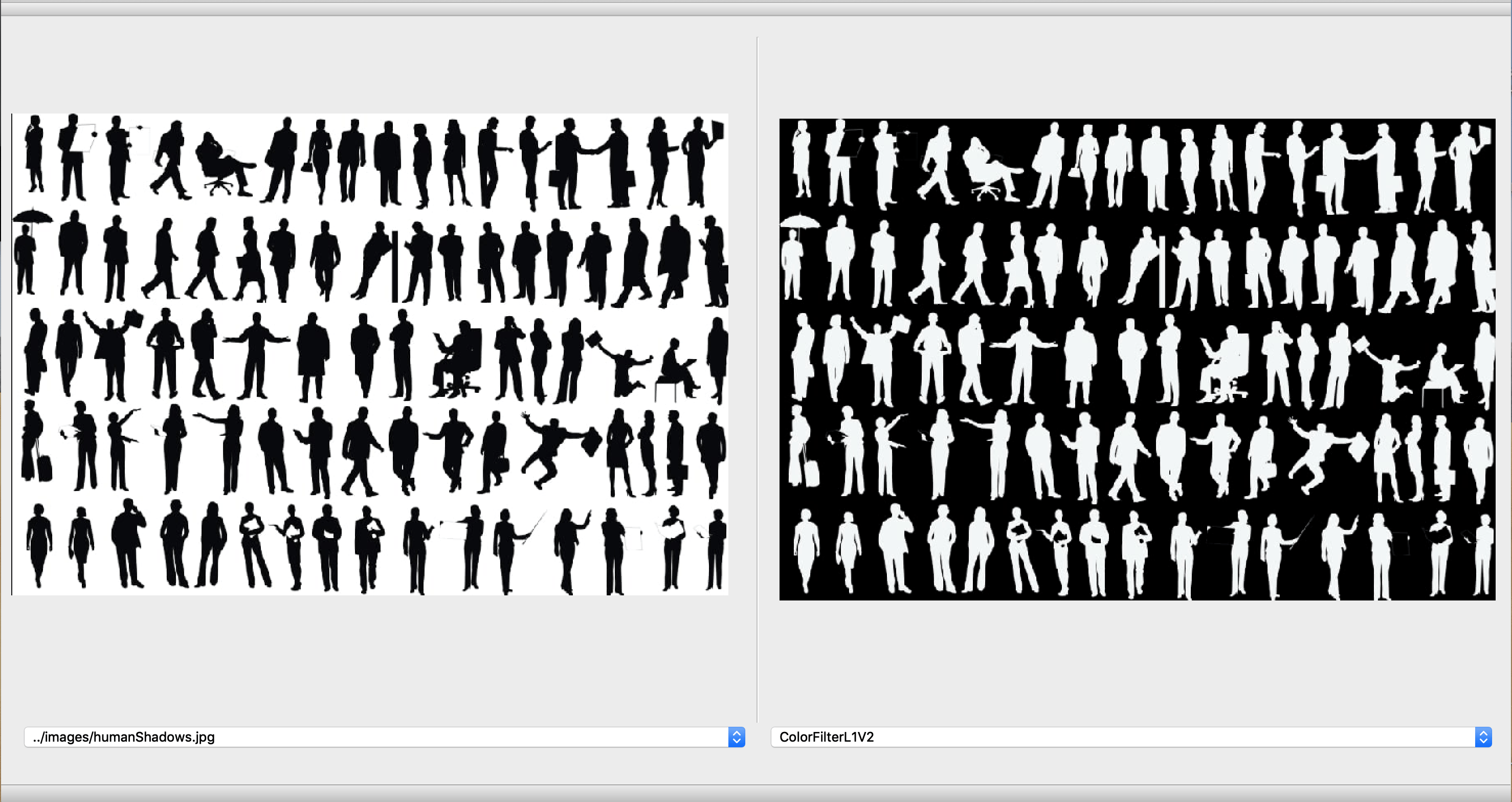

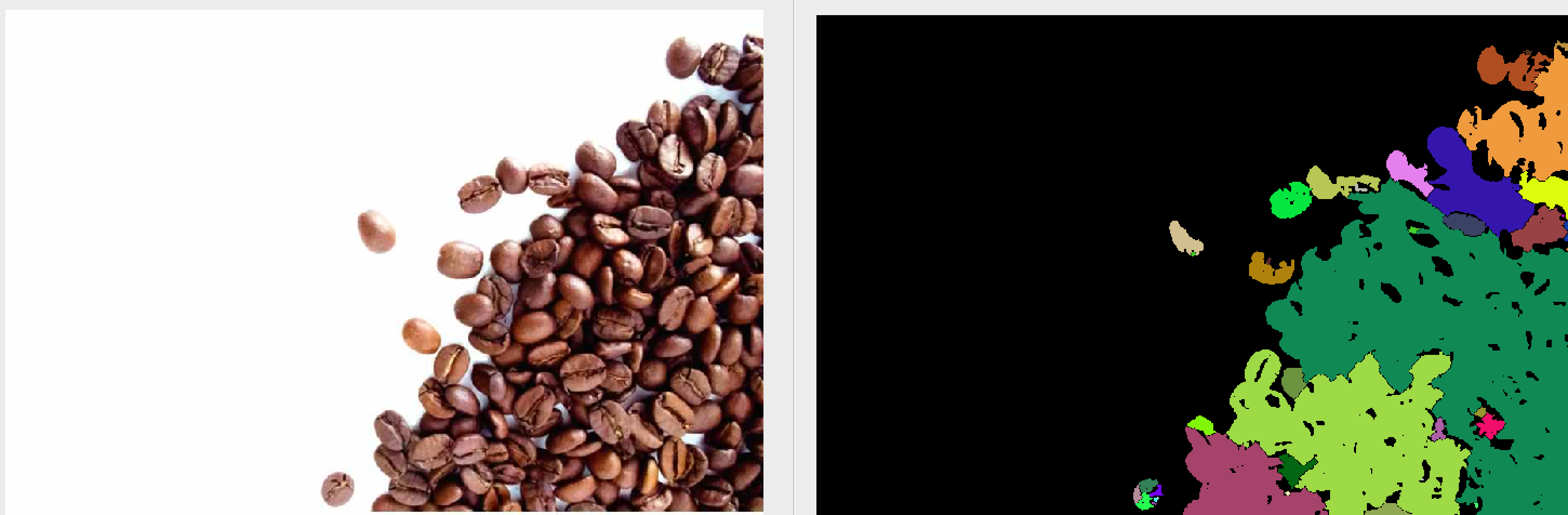

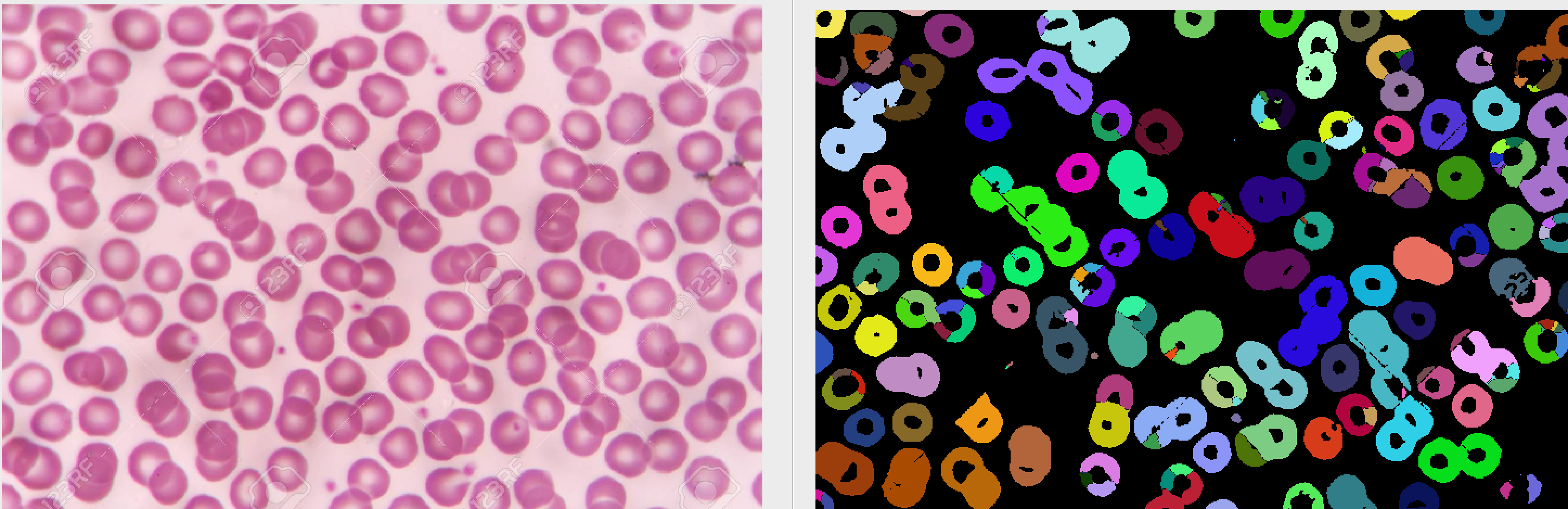

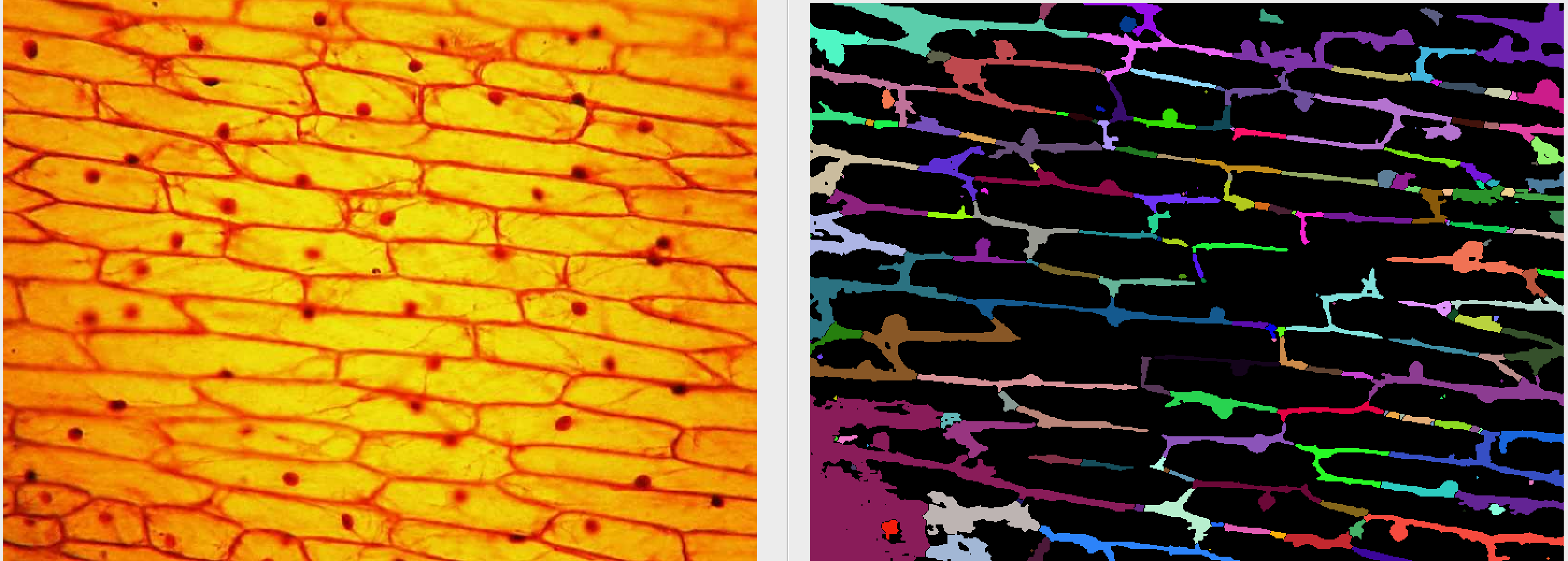

Other results:

Code

References

- http://www.ijsce.org/wp-content/uploads/papers/v4i1/A2060034114.pdf

- http://www.cmm.mines-paristech.fr/~beucher/wtshed.html

- https://en.m.wikipedia.org/wiki/Watershed_(image_processing)

- https://stackoverflow.com/questions/11294859/how-to-define-the-markers-for-watershed-in-opencv

- https://www.cs.auckland.ac.nz/courses/compsci773s1c/lectures/ImageProcessing-html/topic4.htm

- https://homepages.inf.ed.ac.uk/rbf/HIPR2/erode.htm

- https://homepages.inf.ed.ac.uk/rbf/HIPR2/dilate.htm